
Unlocking the Power of AI: How the Co-Lab is Exploring LLMs on Raspberry Pi

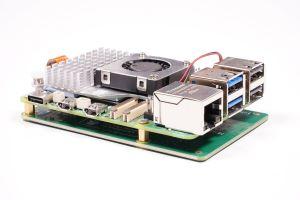

At the Co-Lab, we’re all about pushing the boundaries of what’s possible with technology. From building cutting-edge AI tools to experimenting with DIY hardware setups, innovation is our middle name. This time, we’re dipping our toes into the world of Large Language Models (LLMs), the super-smart algorithms behind tools like ChatGPT. We're going to take a Large Language Model and run it on the not-so-large Raspberry Pi. 🥧💡

Why? Because we believe AI should be accessible, customizable, and, let’s face it, a little bit fun. Keep reading to see how we’ve transformed this big tech buzzword into a hands-on project you can try yourself.

What Are LLMs, and Why Should You Care?

Think of LLMs as the multitool of the AI world. These models are trained on massive datasets of language and can do everything from writing poetry to answering trivia questions. They’re the brains behind chatbots, content generators, and even AI assistants.

But LLMs aren’t just for big tech companies with huge server farms. Thanks to platforms like Hugging Face and Ollama, you can now run small versions of these models yourself, even on a Raspberry Pi! Yes, that credit-card-sized computer sitting on your desk can be a mini AI powerhouse.

How We Made It Happen

Our recent project took a two-pronged approach to explore the potential of LLMs:

1. Online LLMs with Hugging Face

We kicked things off with Hugging Face, the go-to platform for pre-trained AI models. Using Python, we built a chatbot powered by SmolLM2-135M, a lightweight model that works like a charm on the Raspberry Pi. It’s quick, responsive, and surprisingly capable for such a small model.

With just a few lines of Python code, we created an interactive bot that could answer questions, tell jokes, and generate short stories—all in real time.
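For a sense of what those few lines can look like, here is a minimal sketch rather than our exact lesson code. It assumes you have installed `transformers` and `torch`, and that the checkpoint is `HuggingFaceTB/SmolLM2-135M-Instruct` (the instruction-tuned variant is our assumption for chat-style use). The helper names `add_turn`, `trim_history`, `load_model`, and `generate_reply` are made up for this example.

```python
# Minimal chatbot sketch. Assumes `pip install transformers torch` and that the
# Instruct variant of SmolLM2-135M is the checkpoint you want (an assumption).
MODEL_ID = "HuggingFaceTB/SmolLM2-135M-Instruct"


def add_turn(history, role, content):
    """Return a new history list with one more chat message appended."""
    return history + [{"role": role, "content": content}]


def trim_history(history, max_messages=6):
    """Keep only the most recent messages so prompts stay short on a Pi."""
    return history[-max_messages:]


def load_model(model_id=MODEL_ID):
    """Download (on first run) and load the tokenizer and model."""
    # Imported here so the helpers above work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    return tokenizer, model


def generate_reply(tokenizer, model, history, max_new_tokens=128):
    """Format the chat history with the model's template and generate a reply."""
    inputs = tokenizer.apply_chat_template(
        history,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Skip the prompt tokens so only the newly generated text is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True)


def main():
    """Simple terminal chat loop; type 'quit' or 'exit' to stop."""
    tokenizer, model = load_model()
    history = []
    while True:
        user_text = input("You: ").strip()
        if user_text.lower() in {"quit", "exit"}:
            break
        history = trim_history(add_turn(history, "user", user_text))
        reply = generate_reply(tokenizer, model, history)
        history = add_turn(history, "assistant", reply)
        print(f"Bot: {reply}")
```

Call `main()` to start chatting. The first run needs an internet connection to download the model weights. Trimming the history is a design choice for small hardware: shorter prompts mean less work per reply.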

2. Offline LLMs with Ollama

Next, we took things offline with Ollama, a tool that lets you run AI models locally. Why is this cool? Because once the model is downloaded, no internet is required. Your data stays private, and you can use AI anywhere, whether you’re in a coffee shop or a cabin in the woods.

We downloaded the smollm:135m model and set it up to generate text on the Raspberry Pi. To make it even cooler, we added real-time streaming output, so responses appear piece by piece as they are generated instead of all at once.
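Here is one way streaming like this could be wired up in Python, as a sketch under a few assumptions: Ollama is running on the Pi at its default local address (`http://localhost:11434`), the model has already been fetched with `ollama pull smollm:135m`, and we talk to Ollama's `/api/generate` endpoint using only the standard library. The function names `iter_tokens` and `stream_reply` are hypothetical.

```python
import json
import urllib.request

# Assumptions: Ollama's default local endpoint and the model named in this post.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "smollm:135m"


def iter_tokens(lines):
    """Yield text fragments from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final chunk is marked with "done": true


def stream_reply(prompt, model=MODEL, url=OLLAMA_URL):
    """Print the reply as it arrives and return the full text at the end."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True})
    request = urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    pieces = []
    with urllib.request.urlopen(request) as response:
        # The HTTP response is iterable line by line, one JSON object per line.
        for token in iter_tokens(response):
            print(token, end="", flush=True)
            pieces.append(token)
    print()
    return "".join(pieces)
```

For example, `stream_reply("Tell me a joke about pie.")` prints the answer fragment by fragment. Keeping the parsing in `iter_tokens` separate from the network call makes it easy to try out without a running Ollama server.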

What's Cool About This Project

This project isn’t just about building something cool (though that’s a big part of it). It’s about demonstrating how AI can be:

- Accessible: You don’t need a supercomputer to get started. A Raspberry Pi is all it takes.

- Private: Offline models ensure your data stays yours.

- Fun: Chatbots, AI assistants, and smart tools—all powered by you!

And the best part? These concepts can be applied to so many areas, from smart home devices to educational tools.

Want to Try It Yourself?

We’ve put together a complete async lesson that walks you through the entire process—from installing the tools to running your first chatbot. Whether you’re a seasoned developer or a curious beginner, this guide is perfect for anyone who wants to explore the world of LLMs.

What’s Next at the Co-Lab?

This project is just the tip of the iceberg. At the Co-Lab, we’re always experimenting with new technologies, and we encourage you to visit and explore with us! Want to stay in the loop? Follow us for updates on our latest projects, tutorials, and events. And if you’ve got an idea for an AI-powered project, we’d love to hear from you!

Let’s build the future—one Raspberry Pi at a time. 🚀